Weeknotes 2024-07-22

"I think we all learned a valuable lesson from this: Never ship." --Tyler Hillsman

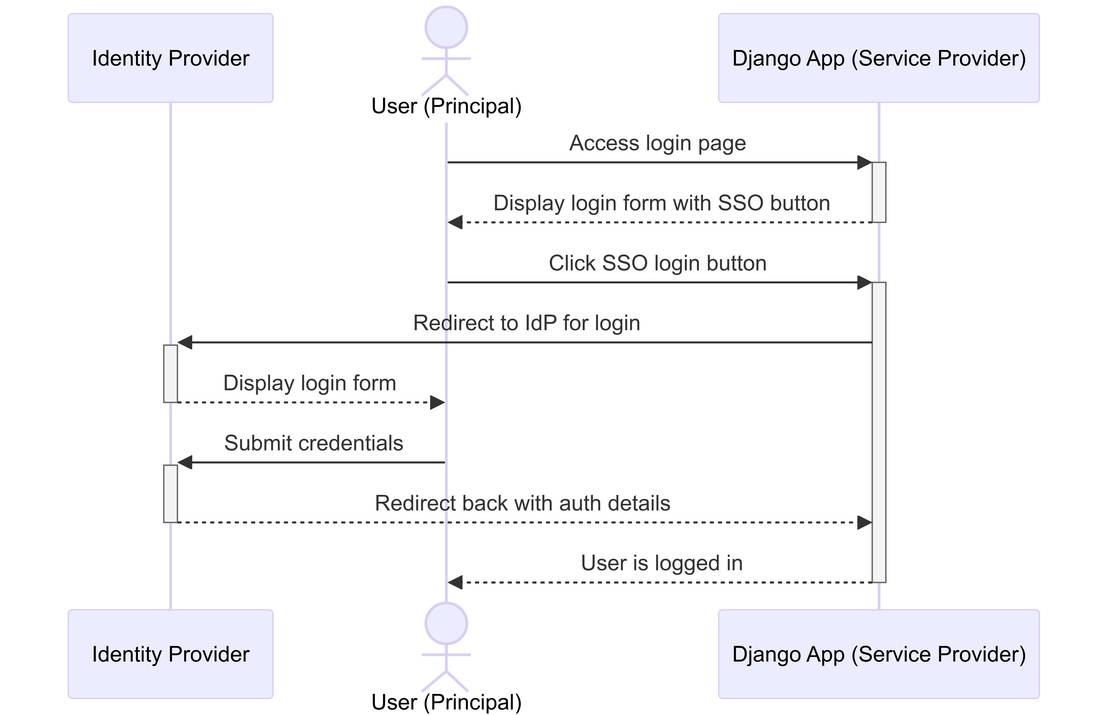

With vacation just around the corner, work has been pretty light. I attended the Django Cologne Meetup and watched an interesting talk about Django background tasks. It’s great to think about not having to deal with Celery anymore. I also recorded and published a podcast episode on the Python Data Model. Then, I wrote a piece on implementing Django with SSO and managed to release a new version of django-cast (though there aren’t many updates).

I ran into a strange issue where some command-line tools written in Rust (bat, exa) stopped working and showed error messages like this:

❯ bat Procfile

dyld[89933]: Library not loaded: /opt/homebrew/opt/libgit2@1.7/lib/libgit2.1.7.dylib

Referenced from: <968B81E5-4BAB-323C-8FD5-1BFB54F3052D> /opt/homebrew/Cellar/bat/0.24.0_1/bin/bat

Reason: tried: '/opt/homebrew/opt/libgit2@1.7/lib/libgit2.1.7.dylib' (no such file), '/System/Volumes/Preboot/Cryptexes/OS/opt/homebrew/opt/libgit2@1.7/lib/libgit2.1.7.dylib' (no such file), '/opt/homebrew/opt/libgit2@1.7/lib/libgit2.1.7.dylib' (no such file), '/opt/homebrew/Cellar/libgit2/1.8.1/lib/libgit2.1.7.dylib' (no such file), '/System/Volumes/Preboot/Cryptexes/OS/opt/homebrew/Cellar/libgit2/1.8.1/lib/libgit2.1.7.dylib' (no such file), '/opt/homebrew/Cellar/libgit2/1.8.1/lib/libgit2.1.7.dylib' (no such file)

fish: Job 1, 'bat Procfile' terminated by signal SIGABRT (Abort)

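Running brew reinstall bat fixed it. Judging by the paths in the error, the binary was presumably still linked against libgit2 1.7 while Homebrew had already moved on to libgit2 1.8.1.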
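If this happens again with other tools, the relevant bits can be pulled out of the dyld message automatically. The following is only a rough sketch: the function names are hypothetical, and the regular expressions assume the message layout shown in the error above (a "Library not loaded:" line and a "Referenced from:" line pointing into the Homebrew Cellar).

```python
import re

# Assumption: dyld prints the missing library after "Library not loaded:".
LIBRARY_RE = re.compile(r"Library not loaded: (\S+)")
# Assumption: the broken binary lives in .../Cellar/<formula>/<version>/bin/.
CELLAR_RE = re.compile(r"Referenced from: .*?/Cellar/([^/]+)/[^/]+/bin/")


def diagnose_dyld_error(message):
    """Return (missing_library, formula) parsed from a dyld error, or None."""
    lib = LIBRARY_RE.search(message)
    ref = CELLAR_RE.search(message)
    if lib is None or ref is None:
        return None
    return lib.group(1), ref.group(1)


def suggest_fix(message):
    """Return the brew command that would relink the broken formula, or None."""
    parsed = diagnose_dyld_error(message)
    if parsed is None:
        return None
    _missing_library, formula = parsed
    return f"brew reinstall {formula}"
```

Feeding the captured stderr of the failing command into suggest_fix should yield the same brew reinstall call that I ran by hand. It only builds the command string and does not execute anything.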

Articles

- Microsoft Research Introduces AgentInstruct: A Multi-Agent Workflow Framework for Enhancing Synthetic Data Quality and Diversity in AI Model Training | A single GPU model outperforming GPT-3.5? Cool!

- Imitation Intelligence, my keynote for PyCon US 2024

- Friday Deploy Freezes Are Exactly Like Murdering Puppies | Seems about the right time to repost this

- The PSF Board Election Results For 2024: What I Think

- Physics Simulations in Bevy

- Mining JIT traces for missing optimizations with Z3

Videos

- I tried using AI. It scared me. | Hmm, it doesn't scare me, and neither did Napster. I'm always happy about new stuff you can use to do cool things.

- DjangoCon Europe 2024 | Fast on my machine: How to debug slow requests in production

- DjangoCon Europe 2024 | Data Oriented Django Deux

Software

- aseprite - Animated Sprite Editor

- django-readers - A lightweight function-oriented toolkit for better organisation of business logic and efficient selection and projection of data in Django projects

- Zettlr - Your One-Stop Publication Workbench

Fediverse

- When I worked at a cloud infrastructure company I evaluated some “endpoint management” security agent software | Thread on the CrowdStrike disaster

- Any sufficiently advanced "endpoint protection" software is indistinguishable from malware.

Weeknotes

- Weeklog for Week 28: July 08 to July 14 | Johannes

- Weeknotes: GPT-4o mini, LLM 0.15, sqlite-utils 3.37 and building a staging environment | Simon Willison

- Months in Review | Luis 😄